Lightweight Linux Containers in Containerlab

When I was labbing back in the day on GNS3, all I needed to test my routing was another router. That device's sole purpose was to ping across the network: I just had to create a default route pointing to the upstream router, and I could ping (if my lab was working).

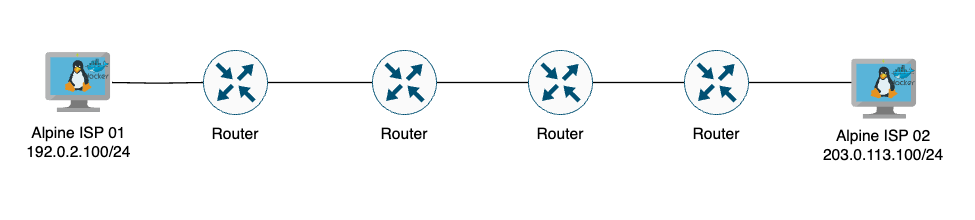

Nowadays, with infrastructure as code, I don't want to spend time configuring IP addresses on routers, and I want to conserve as many resources as I can. I decided to use 2 containers that are configured with the same IP addresses in every single lab: 192.0.2.100/24 and 203.0.113.100/24.

These 2 IP addresses belong to subnets reserved for documentation, as described in RFC 5737.
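As a quick illustration of which addresses fall inside those reserved ranges, here is a minimal POSIX shell sketch. The function name `rfc5737_block` is hypothetical, and it relies on simple glob matching, so it assumes the input is already a well-formed dotted-quad IPv4 address rather than validating it.

```shell
#!/bin/sh
# Hypothetical helper: report which RFC 5737 documentation block
# (TEST-NET-1/2/3) an IPv4 address belongs to, or "none".
# Glob matching only: the input is assumed to be a valid dotted-quad.
rfc5737_block() {
    case "$1" in
        192.0.2.*)    echo "TEST-NET-1" ;;  # 192.0.2.0/24
        198.51.100.*) echo "TEST-NET-2" ;;  # 198.51.100.0/24
        203.0.113.*)  echo "TEST-NET-3" ;;  # 203.0.113.0/24
        *)            echo "none" ;;
    esac
}

# Example: classify both test-container addresses and a management IP.
for addr in 192.0.2.100 203.0.113.100 172.20.20.4; do
    printf '%s -> %s\n' "$addr" "$(rfc5737_block "$addr")"
done
```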

I did this by creating two lightweight containers with a few network tools that are useful for my daily lab operations (I just realized I could add Scapy to the containers as well):

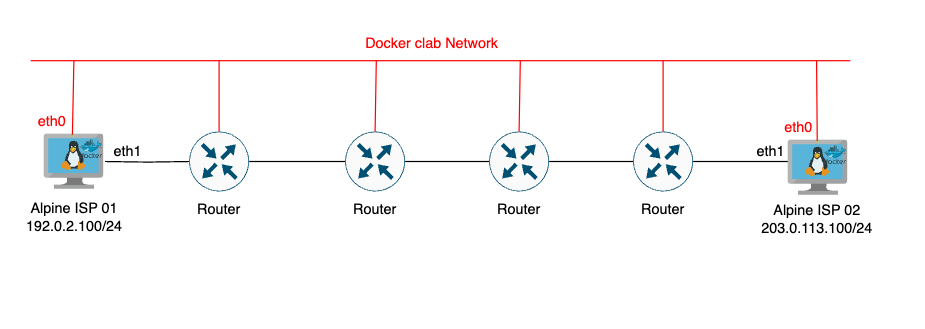

All of my labs include these 2 containers, so I can test various things using very few resources. The Docker images are 38 MB and boot almost instantly.

Usually I leave eth0 for management and use eth1 for the data path of the actual lab.

Here is the Dockerfile:

```dockerfile
FROM alpine:latest

# Label the image
LABEL maintainer="nicolas@vpackets.net"

# Update package list and install network tools
RUN apk add --no-cache bash \
    iputils \
    iperf3 \
    mtr \
    bind-tools \
    netcat-openbsd \
    curl \
    nmap

COPY network-config.sh /usr/local/bin/
RUN chmod +x /usr/local/bin/network-config.sh
# Set the default command to launch when starting the container
# CMD ["/usr/local/bin/network-config.sh"]
CMD ["sh"]
```
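To confirm that a built or pulled image really contains the tools installed above, you could run a small check inside the container. This is a sketch: the function name `missing_tools` is hypothetical, and the binary names it checks (`ping` from iputils, `dig` from bind-tools, `nc` from netcat-openbsd) are the usual executables those Alpine packages provide.

```shell
#!/bin/sh
# Hypothetical helper: print every command name that is NOT on the PATH.
# Prints nothing and returns 0 when all tools are present, returns 1 otherwise.
missing_tools() {
    status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return "$status"
}

# Executables provided by the packages installed in the Dockerfile above.
if missing_tools bash ping iperf3 mtr dig nc curl nmap; then
    echo "all tools present"
fi
```

You can paste the function into the container's shell (for example after `docker exec -it <container> sh`) and run the last lines there.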

When the image is built, Docker copies network-config.sh into it, so the script must be in the same folder as the Dockerfile. Here is the script for Container-ISP-01:

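```shell
#!/bin/sh

# Assign the lab-facing address to the data-path interface
ip addr add 192.0.2.100/24 dev eth1
# Bring the interface up
ip link set eth1 up
# Reach the other test container's subnet via the upstream router
ip route add 203.0.113.0/24 via 192.0.2.1 dev eth1
```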

And this is the network-config.sh script for Container-ISP-02:

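```shell
#!/bin/sh

# Assign the lab-facing address to the data-path interface
ip addr add 203.0.113.100/24 dev eth1
# Bring the interface up
ip link set eth1 up
# Reach the other test container's subnet via the upstream router
ip route add 192.0.2.0/24 via 203.0.113.1 dev eth1
```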
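Since the two scripts differ only in three values, they could be collapsed into one. The sketch below is hypothetical (the function name `configure_uplink` and the `DRY_RUN` switch are not part of the published images): it issues the same three `ip` commands as the scripts above, and with `DRY_RUN=1` it only prints them, which is handy for checking the values before touching an interface.

```shell
#!/bin/sh
# Hypothetical generic variant of network-config.sh: the same three ip
# commands as the per-container scripts, with the values passed as arguments.

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage: configure_uplink IFACE LOCAL_ADDR/PREFIX GATEWAY REMOTE_NET/PREFIX
configure_uplink() {
    iface=$1
    local_addr=$2
    gateway=$3
    remote_net=$4
    run ip addr add "$local_addr" dev "$iface"                  # lab-facing address
    run ip link set "$iface" up                                 # bring the link up
    run ip route add "$remote_net" via "$gateway" dev "$iface"  # other test subnet
}

# Dry run with the Container-ISP-01 values: prints the three commands.
DRY_RUN=1 configure_uplink eth1 192.0.2.100/24 192.0.2.1 203.0.113.0/24
```

Container-ISP-02 would call `configure_uplink eth1 203.0.113.100/24 203.0.113.1 192.0.2.0/24` instead.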

These network scripts run when the container is launched, thanks to the `exec` option in the Containerlab topology file.

You can pull the images directly from the Docker Hub registry here:

```shell
docker pull vpackets/alpine-tools-containerlab-isp-01
docker pull vpackets/alpine-tools-containerlab-isp-02
```
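If you would rather build the images yourself, a loop like the one below would do it. It is a sketch that assumes a hypothetical layout where each image has its own folder (`isp-01/` and `isp-02/`) containing the Dockerfile plus that container's network-config.sh; the tags match the Docker Hub names. `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch: build both images from per-image folders (hypothetical layout:
# ./isp-01 and ./isp-02, each holding the Dockerfile and its network-config.sh).

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

build_images() {
    for n in 01 02; do
        # The folder is the build context, so COPY finds network-config.sh there.
        run docker build -t "vpackets/alpine-tools-containerlab-isp-$n:latest" "isp-$n"
    done
}

# Print the commands first; drop DRY_RUN=1 to actually build.
DRY_RUN=1 build_images
```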

Here is an example of how to launch these Linux containers in a Containerlab YAML topology:

```yaml
name: eBGP-c8K

topology:
  nodes:
    linux01:
      kind: linux
      image: vpackets/alpine-tools-containerlab-isp-01:latest
      exec:
        - sh /usr/local/bin/network-config.sh

    linux02:
      kind: linux
      image: vpackets/alpine-tools-containerlab-isp-02:latest
      exec:
        - sh /usr/local/bin/network-config.sh

    Cisco8201-1:
      kind: cisco_c8000
      image: 8201-32fh_214:7.10.1

    Cisco8201-2:
      kind: cisco_c8000
      image: 8201-32fh_214:7.10.1

  links:
    - endpoints: ["linux01:eth1", "Cisco8201-1:FH0_0_0_0"]
    - endpoints: ["Cisco8201-1:FH0_0_0_1", "Cisco8201-2:FH0_0_0_1"]
    - endpoints: ["linux02:eth1", "Cisco8201-2:FH0_0_0_0"]
```
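Once the lab is deployed, you can check the data path end to end from the host. Containerlab names each container `clab-<lab-name>-<node-name>` by default, so a small helper can build that name and run ping inside the container. The function name `clab_ping` is hypothetical, and `DRY_RUN=1` prints the docker command instead of running it. The ping itself only succeeds once the routers actually forward traffic between 192.0.2.0/24 and 203.0.113.0/24.

```shell
#!/bin/sh
# Hypothetical helper: ping TARGET from a Containerlab node.
# Relies on Containerlab's default container naming: clab-<lab>-<node>.
clab_ping() {
    lab=$1
    node=$2
    target=$3
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "docker exec clab-$lab-$node ping -c 3 $target"
    else
        docker exec "clab-$lab-$node" ping -c 3 "$target"
    fi
}

# Example: from linux01 (192.0.2.100) to linux02 (203.0.113.100).
DRY_RUN=1 clab_ping eBGP-c8K linux01 203.0.113.100
```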

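When you launch the lab and access the Linux containers, this is what you can expect (the capture below comes from a lab named eBGP-CSR, hence the container name clab-eBGP-CSR-linux01; with the topology above it would be clab-eBGP-c8K-linux01):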

```text
vpackets@srv-containerlab:/home/vpackets $ docker exec -it clab-eBGP-CSR-linux01 sh

/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
85: eth0@if86: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ac:14:14:04 brd ff:ff:ff:ff:ff:ff
    inet 172.20.20.4/24 brd 172.20.20.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 2001:172:20:20::4/64 scope global flags 02
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe14:1404/64 scope link
       valid_lft forever preferred_lft forever
90: eth1@if89: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 9500 qdisc noqueue state UP
    link/ether aa:c1:ab:a4:ab:cc brd ff:ff:ff:ff:ff:ff
    inet 192.0.2.100/24 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::a8c1:abff:fea4:abcc/64 scope link
       valid_lft forever preferred_lft forever
/ # ip route
default via 172.20.20.1 dev eth0
172.20.20.0/24 dev eth0 scope link src 172.20.20.4
192.0.2.0/24 dev eth1 scope link src 192.0.2.100
203.0.113.0/24 via 192.0.2.1 dev eth1
/ #
```

Containerlab automatically assigns eth0 an address from its management network (172.20.20.0/24 by default), and the custom script takes care of the eth1 configuration.

That way, I can easily identify these routes and subnets in my lab and know which route belongs to which host.
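To make that check explicit, a hypothetical helper could read `ip route` output and confirm that the route to the other test subnet points at the expected next hop on eth1. It is a sketch that assumes the `ip route` output format shown above; the name `check_test_route` is illustrative.

```shell
#!/bin/sh
# Hypothetical helper: read `ip route` output on stdin and succeed only if
# REMOTE_NET is routed via GATEWAY on IFACE (default eth1).
check_test_route() {
    remote_net=$1
    gateway=$2
    iface=${3:-eth1}
    # Field layout assumed: "<prefix> via <gateway> dev <iface> ..."
    awk -v net="$remote_net" -v gw="$gateway" -v dev="$iface" '
        $1 == net && $2 == "via" && $3 == gw && $4 == "dev" && $5 == dev { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Example against the routing table from linux01 shown above.
sample='default via 172.20.20.1 dev eth0
172.20.20.0/24 dev eth0 scope link src 172.20.20.4
192.0.2.0/24 dev eth1 scope link src 192.0.2.100
203.0.113.0/24 via 192.0.2.1 dev eth1'

if printf '%s\n' "$sample" | check_test_route 203.0.113.0/24 192.0.2.1; then
    echo "route to 203.0.113.0/24 via 192.0.2.1 is present"
fi
```

Inside a running container the same check would be `ip route | check_test_route 203.0.113.0/24 192.0.2.1`.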

Happy labbing!

PS: You can find other useful containers here.